Online shopping is becoming increasingly common, and a wide variety of products can be bought without seeing them in person. It is difficult, however, to judge from product images alone whether clothing will suit you, which creates a need for systems that let people easily try clothes on virtually. Although existing virtual dressing room systems are highly versatile, they have struggled to generate high-quality images of clothing in real time.

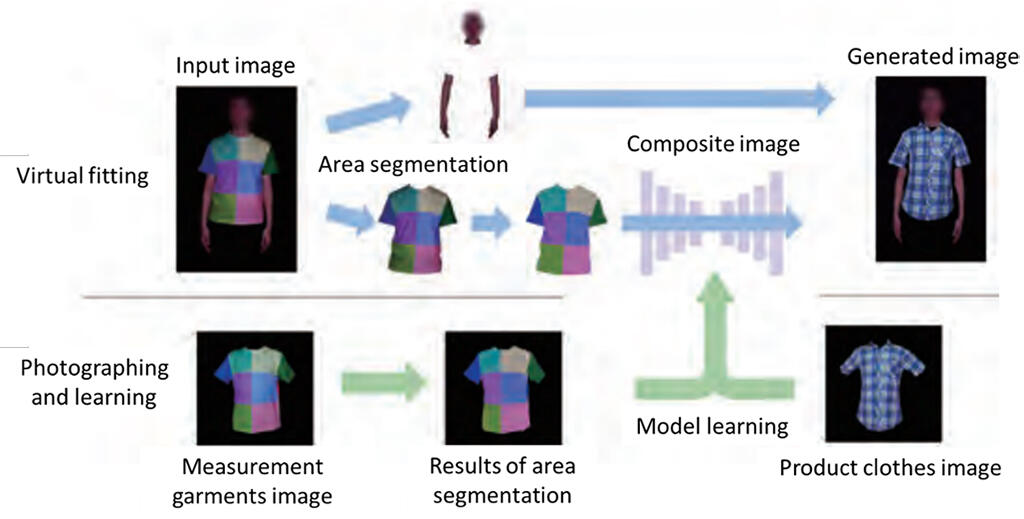

Professor Takeo Igarashi and his colleagues at the Graduate School of Information Science and Technology, the University of Tokyo, have developed a robot mannequin dedicated to photographing training data. The mannequin's body shape and pose can be controlled automatically, so it can be dressed in measurement garments and photographed from a variety of angles and in varied poses. The measurement garments are roughly color-coded by body part, so each region can be labeled based on color information, and this labeling indicates how the garment fits the body. By training the program on a large volume of images of the mannequin wearing both the clothes to be tried on and the measurement garments, the group succeeded in constructing a deep learning model that can handle a wide variety of poses and body shapes.
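The idea of labeling a color-coded garment by color can be illustrated with a minimal sketch. It is not the group's actual method: the palette, the body-part names, and the functions `nearest_part` and `label_image` are all hypothetical, and the sketch simply assigns each pixel to the body part whose reference color is closest.

```python
# Hypothetical palette: the body-part names and RGB values below are
# illustrative assumptions, not the colors of the real measurement garment.
PALETTE = {
    "torso": (255, 0, 0),
    "left_arm": (0, 255, 0),
    "right_arm": (0, 0, 255),
    "background": (0, 0, 0),
}


def nearest_part(rgb):
    """Return the palette entry whose color is closest to rgb (squared RGB distance)."""
    return min(
        PALETTE,
        key=lambda part: sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[part])),
    )


def label_image(image):
    """Label every pixel of an image given as rows of (R, G, B) tuples."""
    return [[nearest_part(pixel) for pixel in row] for row in image]


# A tiny 2x2 "photo" with slightly noisy colors, as a camera might record them.
photo = [
    [(250, 10, 5), (3, 240, 12)],
    [(0, 0, 0), (10, 20, 230)],
]
labels = label_image(photo)
# labels == [["torso", "left_arm"], ["background", "right_arm"]]
```

A real pipeline would likely need to cope with lighting changes and shading, for example by comparing colors in a hue-based color space rather than raw RGB, but the nearest-color rule conveys the basic principle.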

When synthesizing images with the clothing to be tried on, simply taking a picture of the clothes with a camera equipped with a depth sensor is enough for them to change in real time, matching the user's pose and body shape, which makes for a more realistic dressing room experience. In the future, the group will improve the mannequins and optimize the measurement garments, and will work to bring this system to market as a virtual "try-on" service for online shopping and videoconferencing.