A research group consisting of Professor Mayu Yamada, Specially Appointed Researcher Hirono Ohashi, Professor Koh Hosoda, and Assistant Professor Shunsuke Shigaki from the Department of Systems and Control Engineering, Graduate School of Engineering Science, Osaka University, together with Daisuke Kurabayashi from the Tokyo Institute of Technology, has shown for the first time that efficient olfactory search behavior requires the integration of information from multiple senses.

Although many studies have attempted to artificially reproduce the excellent adaptability of living things, robots that behave like living things in all environments have not yet been created. The reason is that it has not been clear what information an organism receives from its environment, and how that information is reflected in its behavior, when the organism is exposed to various environments. By applying virtual reality (VR) technology to insects, the research group was able to measure how behavior changed when the environment presented to the insects was altered slightly, while giving the insects the illusion that they were moving in a real environment.

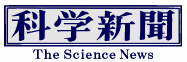

To elucidate the adaptive olfactory search behavior of insects, the research group constructed a VR system for insects that can simultaneously and continuously present multiple types of environmental conditions (odor, wind, and light). Using the VR system, they investigated how male silk moths integrate sensory information when searching for female silk moths. Biological analysis showed that odor and wind information contributed to the adjustment of walking speed and rotational speed, while visual information contributed to posture control. The group then constructed a model from the biological data and evaluated its function as an insect search strategy by simulation.

The simulation showed that the new model achieved a higher search success rate than previously proposed odor-source search models, and that its search trajectory was similar to that of living organisms. According to Assistant Professor Shigaki, "Insects change their behavior because of subtle differences in sensory stimuli. Therefore, it was very difficult to determine the strength and speed of the sensory stimulus to make the insects feel as if they were walking in a real environment. In the future, we would like to create a model for operating the robot using the data of biological experiments obtained with VR for insects, and to establish a robot system that can accomplish the work even in a harsh environment."

Provided by Osaka University

This article has been translated by JST with permission from The Science News Ltd. (https://sci-news.co.jp/). Unauthorized reproduction of the article and photographs is prohibited.