On November 8, Nippon Telegraph and Telephone Corporation (NTT) announced that it had developed an input interface that allows people with severe physical disabilities to map their remaining muscle movements to operational commands in the metaverse. Avatar and game operations using the technology were exhibited at the NTT R&D Forum 2023 IOWN ACCELERATION, held from November 14 to 17.

Provided by NTT

R&D on technologies that help people with severe physical disabilities communicate their intentions is progressing worldwide. The main approaches include brain-signal input based on electroencephalography (EEG) waveforms and eye-gaze sensors. NTT is promoting 'Project Humanity', which aims to solve problems from the perspective of human-centered design, taking into account not only people with disabilities but also those who support them. As part of these activities, NTT is conducting research and development aimed at technology that enables rich communication for people with severe physical disabilities and lets them connect more deeply with society.

In this study, NTT focused on the surface electromyography sensor (sEMG sensor), which is closely tied to physical movement, although few case studies have used the technology. Using the sensor, the company developed an input interface that uses sEMG (the action potentials generated when muscle fibers contract) to map the few remaining muscle movements to operational instructions in the metaverse. This is a novel body-augmentation technology that extends nonverbal expression to the metaverse and to the operation of information and communication technology (ICT) devices.

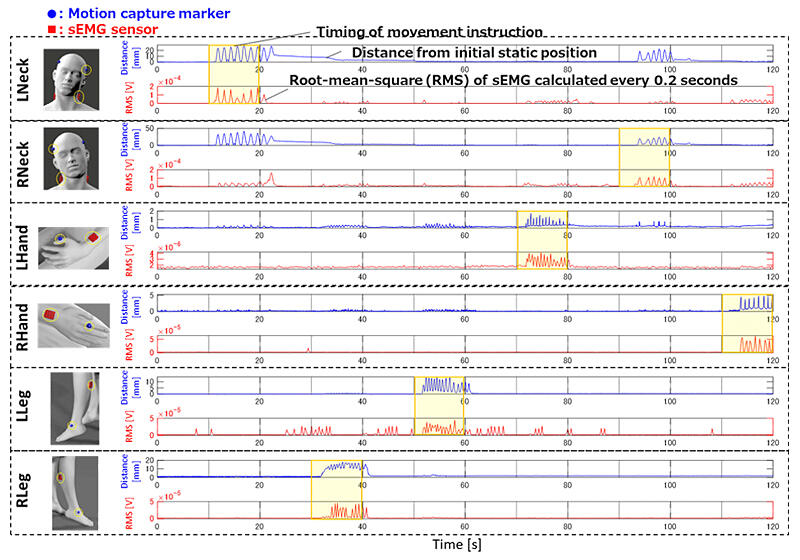

Even a slight muscle movement produces sEMG that a sensor can measure. Observation of body position data together with the sEMG accompanying slight body movements of a person with amyotrophic lateral sclerosis (ALS) showed that movements of even a few millimeters elicited a response in the sensor. However, because disabilities differ between individuals, the body parts to be used must be chosen according to each person's physical condition.

When a person intentionally tries to move one body part, muscles in other areas may also react. Although a person living with ALS moved the body part as instructed, muscle movements in other body parts were observed in some cases. For technologies that extract information from the muscles of the intended body part, two points are essential: (1) calibration, which sets the sEMG reference value to the relaxed state of resting muscles, and (2) setting the threshold values used to detect motion. In applying this technology, NTT set the reference value to the resting state immediately after a melody was heard during the on-stage DJ performance of a person living with ALS, and adjusted the threshold to the state of muscle tension during the actual DJ performance.
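The two points above, calibration against a resting reference and a threshold for detecting motion, can be illustrated with a minimal Python sketch. It is not NTT's implementation: the function names, the use of the mean rectified amplitude as the signal level, and the sample values are all assumptions made for illustration.

```python
from statistics import mean


def calibrate_baseline(resting_samples):
    """Return the mean rectified amplitude of samples recorded at rest.

    Assumption: the resting reference is summarized as one number.
    """
    return mean(abs(s) for s in resting_samples)


def is_contracted(window, baseline, threshold):
    """Judge a contraction when the window's level exceeds the resting
    baseline by more than the threshold (hypothetical rule)."""
    level = mean(abs(s) for s in window)
    return (level - baseline) > threshold


# Illustrative values only, not measured data.
resting = [0.02, -0.01, 0.03, -0.02]
baseline = calibrate_baseline(resting)
```

In this sketch the threshold would be tuned per person and per body part, in line with the article's point that usable body parts and muscle responses differ between individuals.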

A further consideration was muscle fatigue when converting the continuous sEMG measured by the sensor into operational instructions in the metaverse. Because a person with severe disabilities has limited muscle strength and endurance, the intended commands in the metaverse must be carried out with as little body movement as possible. To achieve this, NTT avoided operating commands that require muscles to stay contracted for a long time or that require varying the intensity of contraction, and instead decided to determine each operating command from a judgment of whether the muscle of each body part has contracted.
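One way to read this design is that each body part yields a simple contracted-or-not judgment, and a command fires on a brief contraction rather than on a sustained or graded one. The sketch below shows that idea under stated assumptions: the body part names, the command names, and the choice to fire only on the transition from relaxed to contracted are hypothetical and are not taken from NTT's system.

```python
# Hypothetical mapping from body part to operating command.
COMMANDS = {"left_finger": "wave", "right_cheek": "nod"}


def detect_commands(prev_state, curr_state, command_map=COMMANDS):
    """Return commands for body parts that switched from relaxed to contracted.

    prev_state and curr_state map body part names to booleans
    (True means the muscle was judged to be contracted).
    Holding a contraction does not re-trigger a command, so no
    sustained effort is needed (an assumption of this sketch).
    """
    commands = []
    for part, command in command_map.items():
        was_on = prev_state.get(part, False)
        is_on = curr_state.get(part, False)
        if is_on and not was_on:
            commands.append(command)
    return commands
```

For example, `detect_commands({"left_finger": False}, {"left_finger": True})` yields the single command `"wave"`, while passing the same contracted state twice yields no command.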

The avatar motion corresponding to each operation command was set to play for a fixed period of time, and when the same operation command was repeated, the duration of that avatar motion was extended. An ALS patient who actually used the technology felt that the avatar was moving as they intended and that they could perform the avatar's movements through their own body movements.
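The timing behavior described here can be sketched as a small timer per command. The class name, the 2-second default, and the rule that a repeated command adds one more period to the remaining time are assumptions for illustration; the article does not state how long a motion lasts or exactly how the extension is computed.

```python
class AvatarMotion:
    """Tracks how long each avatar motion stays active (hypothetical model)."""

    def __init__(self, duration=2.0):
        self.duration = duration  # seconds one command keeps a motion active
        self.end_times = {}       # command name -> time at which motion ends

    def trigger(self, command, now):
        """Start the motion, or extend it if it is still running."""
        current_end = self.end_times.get(command, now)
        # If the motion already ended, restart from now; otherwise extend.
        self.end_times[command] = max(current_end, now) + self.duration
        return self.end_times[command]

    def is_active(self, command, now):
        """Return True while the motion for this command is still playing."""
        return command in self.end_times and now < self.end_times[command]
```

With this rule, short repeated contractions keep a motion going without requiring a held contraction, which matches the fatigue concern raised earlier in the article.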

By the end of FY 2024, NTT plans to improve the input interface with technology that can adapt to the degree of disability.

This article has been translated by JST with permission from The Science News Ltd. (https://sci-news.co.jp/). Unauthorized reproduction of the article and photographs is prohibited.