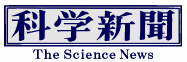

A research group led by Graduate Student Kotaro Yamashiro and Professor Yuji Ikegaya of the Graduate School of Pharmaceutical Sciences at the University of Tokyo announced that they have built a system that records local field potentials from the cerebral cortex of rats and lets artificial intelligence (AI) draw illustrations from this information. They used a latent diffusion model, a type of generative AI that generates images from noise, and supplied neural activity in place of the noise. The genre of the image can be set freely by changing the model used. This is the world's first drawing system combining brain activity and AI. The results were published in the international journal PLOS ONE on September 7.

Provided by the University of Tokyo

"Stable Diffusion" was released by Stability AI in August 2022 as an open-source product that outputs an image corresponding to a sentence entered, for example, "an astronaut riding a horse." Unlike previously reported models for the text-to-image service, this model is open to the public and can be customized. For the constructed system, they created a framework by modifying the Stable Diffusion model to allow for direct real-time input of local field potentials recorded from the rat cerebral cortex. Because the diffusion model learns to reconstruct the original image by removing added noise, it can reconstruct the image from pure noise.

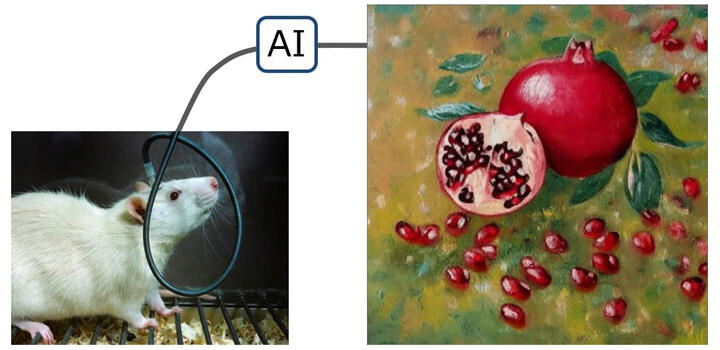

During training, a diffusion model learns the features of a set of sample images. When random noise, such as Gaussian noise, is then input into the model, it generates a completely new image consistent with the style of the training images. Text-to-image models such as "Stable Diffusion" receive two types of input: noise as the source of generation and text for conditioning. The text guides the reconstruction so that the image generated from the noise matches the description. Images that depend solely on the input noise can also be generated by deliberately omitting the text conditioning.
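
One common way for a sampler to combine the two inputs is classifier-free guidance, in which the model's noise prediction with text is mixed with its prediction without text. The sketch below assumes that scheme for illustration only; the article does not say which mechanism the group used. The function name `guided_noise` and the example numbers are hypothetical.

```python
def guided_noise(eps_uncond, eps_cond, scale):
    """Mix unconditional and text-conditioned noise predictions.

    scale = 0 ignores the text entirely; scale = 1 uses the text-conditioned
    prediction; larger values push the result further toward the text.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Hypothetical predictions for three latent values at one denoising step.
eps_uncond = [0.10, -0.20, 0.30]  # prediction made without any text
eps_cond = [0.50, 0.00, -0.10]    # prediction made with a text prompt

no_text = guided_noise(eps_uncond, eps_cond, scale=0.0)
with_text = guided_noise(eps_uncond, eps_cond, scale=1.0)
```

With `scale=0.0` the text has no influence, which corresponds to the case where the output depends only on the input noise.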

In this system, no text conditioning was applied, and local field potentials recorded from the rat cerebral cortex were input in place of noise as the source of the image. Local field potentials are recordings of the electrical signals produced by neurons and take the form of wave-like time series data. They cannot be input into the model as is, so they were compressed to fit the model's input format.
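
As a rough illustration of what compressing a time series to fit the input format can look like, the sketch below block-averages a signal down to a fixed number of values, standardizes them to zero mean and unit variance like Gaussian noise, and reshapes them into a small three-dimensional array. The averaging and standardization steps are assumptions made for this example, not the procedure reported by the group, and `signal_to_latent` is a hypothetical name. The default shape is kept small for readability; Stable Diffusion's latent for a 512×512 image is typically 4×64×64.

```python
import math
import statistics


def signal_to_latent(samples, shape=(4, 8, 8)):
    """Compress a 1-D time series into a standardized array of `shape`."""
    channels, height, width = shape
    n = channels * height * width
    if len(samples) < n:
        raise ValueError("need at least one sample per latent element")

    # Block-average: split the signal into n nearly equal consecutive windows.
    bounds = [i * len(samples) // n for i in range(n + 1)]
    means = [statistics.fmean(samples[bounds[i]:bounds[i + 1]]) for i in range(n)]

    # Standardize to zero mean and unit variance.
    mu = statistics.fmean(means)
    sigma = statistics.pstdev(means) or 1.0  # avoid dividing by zero
    flat = [(m - mu) / sigma for m in means]

    # Reshape the flat list into channels x height x width.
    return [
        [flat[(c * height + r) * width:(c * height + r + 1) * width]
         for r in range(height)]
        for c in range(channels)
    ]


# Synthetic stand-in for a recorded trace: two superimposed sine waves.
trace = [math.sin(0.01 * t) + 0.5 * math.sin(0.13 * t) for t in range(10_000)]
latent = signal_to_latent(trace)
```

Because the function only expects a list of numbers, the same step would apply to any other time series signal.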

If the text input used for conditioning can be made to reflect the internal state of the rat, it is expected that images can be generated according to the rat's "mood," such as an image with a bright atmosphere when the rat is interested or a quiet atmosphere when the rat is sleepy. In principle, the developed method can be applied not only to neural activity but also to any time series signal, including many biosignals such as heartbeat and bowel peristalsis and natural phenomena such as wind and waves.

Ikegaya said, "The attempt to utilize rat brain activity for painting may seem absurd at first glance, but it is an innovative experiment that not only suggests new possibilities for image-generating AI but also dramatically expands the realm of artistic expression. Our future plans include application of this technology to human biological signals."

Journal Information

Publication: PLOS ONE

Title: Diffusion model-based image generation from rat brain activity

DOI: 10.1371/journal.pone.0309709

This article has been translated by JST with permission from The Science News Ltd. (https://sci-news.co.jp/). Unauthorized reproduction of the article and photographs is prohibited.