Light rays traveling through space carry high-dimensional visual information, such as angle, wavelength and time, but ordinary cameras can capture only flat images. To address this, Professor Yasuhiro Mukaigawa of the Graduate School of Science and Technology, Nara Institute of Science and Technology, has succeeded in obtaining images rich in visual information by combining optical devices that measure light along diverse axes with information processing technology. These technologies, which make it possible to understand photographed scenes in depth and to grasp things that cannot be seen, are expected to find applications in industry.

The diverse information of light vanishes in 2D images

Since wooden box cameras appeared in the 1800s, people have made many improvements in their attempts to capture clearer scenes, combining a variety of lenses and materials. In recent years, cameras have become even smaller and more capable, and anyone can easily take beautiful photographs. "Camera images are used everywhere, including autonomous driving and AI diagnostic imaging, but images don't capture all the information," notes Professor Yasuhiro Mukaigawa of the Graduate School of Science and Technology, Nara Institute of Science and Technology.

Both people and cameras "see" by processing the light information that comes in through their lenses. Light that flows through space contains information on passage positions, angles, wavelengths, time and the state of polarization. This is delivered to human eyes and cameras while light phenomena such as reflection, refraction and scattering occur. In other words, it could be said that light is a medium that carries information.

However, even though humans and AI take in the same light, humans can judge the material and qualities of an item in an instant, while it is difficult for AI and other technologies to discern this information from 2D images. "This is because 2D images lose the multi-dimensional information of light. But, if we can accurately capture the necessary information, it will enable a deeper understanding of a scene from an image," says Mukaigawa.

In the case of autonomous driving, if the systems involved could accurately extract the necessary information from road signs, even in bad weather such as fog or rain, safety would greatly increase. Likewise, if doctors at a hospital could immediately check the state of subcutaneous blood vessels without using large-scale equipment, it would surely help them to draw blood and make diagnoses. Against this background, Mukaigawa started the CREST program "Joint Design of Encoding and Decoding for Plenoptic Imaging" (Figure 1) to develop technology that enables special photography that "sees only what we want it to see" and "sees what cannot be seen."

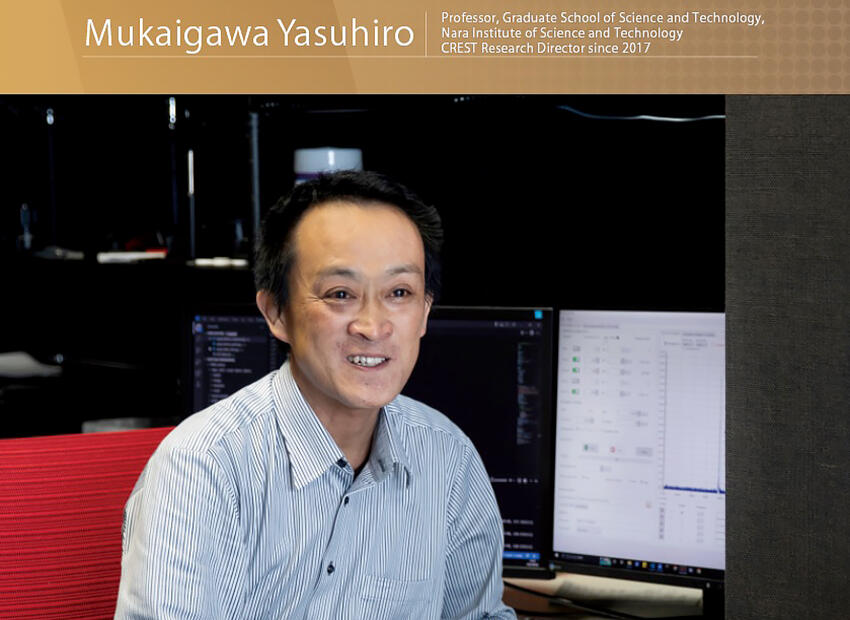

Figure 1: CREST team structure

The project team is divided into four groups. The Measurement Group, led by Professor Mukaigawa, measures plenoptic information and creates an information-science model of the photography process. In the Analysis Group, Professor Yasuyuki Matsushita of the Graduate School of Information Science and Technology, Osaka University, is responsible for algorithm design for higher-dimensional data analysis and for software development. In the Specific Application Group, Associate Professor Kenichiro Tanaka of the College of Information Science and Engineering, Ritsumeikan University, works on interdisciplinary applications of plenoptic information and on optimization suited to those applications. In the Combination Application Group, Associate Professor Hiroyuki Kubo of the Graduate School of Engineering, Chiba University, works on combined applications of plenoptic information and on optimal visualization.

These four groups are working with each other, combining the optical design of measuring devices and calculation algorithms in the field of information science with the aim of realizing higher-dimension optical imaging through the cooperative design of both areas. In other words, they are developing both optical devices and image processing technology that will make the invisible visible as they strive to obtain unprecedented images.

Fighting by changing perceptions without following trends

Mukaigawa processes images containing the targeted multi-dimensional information on computers, but in image information processing research, the prevailing style is to apply deep learning to vast numbers of 2D images. In the past, it was difficult to gather images that could be studied on a computer, but researchers can now obtain any number of them via the Internet. This is the main factor behind the trend. Remarkably, research on image processing can now proceed without a camera at all.

In response, Mukaigawa says, "We are not following trends. We are taking on challenges on the global front lines by changing perceptions." He continues to carefully capture information-rich images using the measuring devices he and his colleagues have devised. "As long as images with little information are used as materials, as they are in the current deep learning, we'll only obtain outcomes that are extensions of past approaches. We want to come up with entirely new, unprecedented outcomes by increasing the amount of information in an image," he emphasizes.

Initially, the project measured light rays on three axes (wavelength, viewing position and time in nanoseconds) to greatly increase the dimensions of the information included in an image, establishing guidelines for the extraction of the latent visual data that had not been used in the past. However, the higher the dimensions, the greater the time and cost to measure them. There was also increased noise in the data obtained, so complex computing was needed for the vast amounts of data. On top of this, there were physical and optical limits to the performance of the measurement devices.

Thus, Mukaigawa and his colleagues revised their camera photography principles from the bottom up, and designed a photographic method based on the assumption of image processing that combines the optical design of a measurement device with calculation algorithms in the information science field. "There isn't much computer vision research that uses information captured by special cameras, and crossing measurements and information also had the advantage of increased originality," he said as he explained their goals.

One research outcome based on this approach is the photography of objects in cloudy water. A normal camera captures only an indistinct image, because the scene contains both light transmitted straight through the water from the light source and scattered light. Light that hits the object in the water is not transmitted, so if only the light traveling in a straight line could be retrieved, the object would appear as a shadow (Figure 2). Isolating that light optically at the moment of capture is physically impossible, so the group designed a measuring method that works backwards, reconstructing this image afterwards on a computer.

Figure 2: Measurement technology that distinguishes the transmission and scattering of light

Developing technology to visualize subcutaneous blood vessels and contactless touch panels

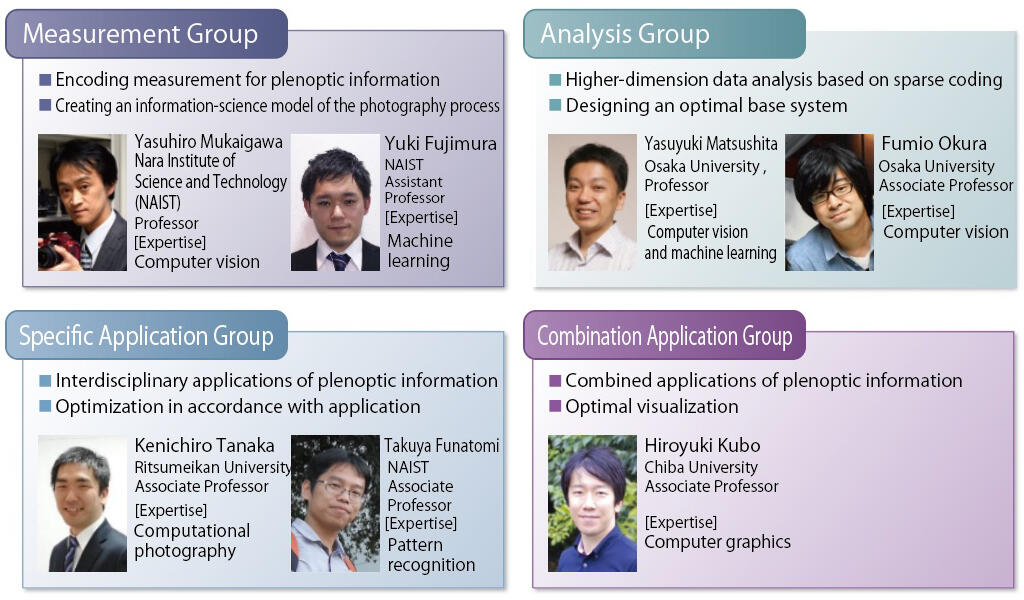

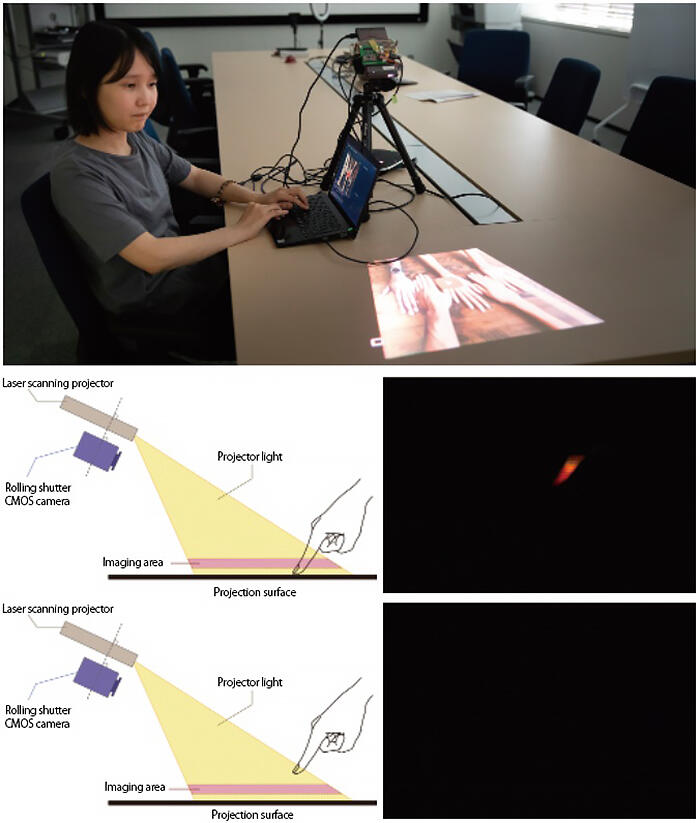

Using this kind of technology, which can differentiate between and extract light, it is possible to view subcutaneous blood vessels. When light is irradiated onto the skin from a light source, the majority of the light is reflected off the surface of the skin, but a small part penetrates it and scatters. Consequently, a tiny gap is formed between the area where light hits the skin and the area from where light exits the skin after reflecting internally. Mukaigawa and his colleagues focused on the scattered light that passes through the inside of the skin, and constructed equipment with a laser scanning projector and rolling shutter camera in parallel to measure this light (Figure 3).

Figure 3: Contactless visualization of subcutaneous blood vessels in real-time

More specifically, they intentionally introduced a short delay of less than 1 millisecond between the light ray irradiation and the photography. This shifts the location photographed by the camera away from the location irradiated by the light ray, so the camera selectively captures only the scattered light that passes through the inside of the skin rather than the light reflected from its surface. As this process is unlikely to be affected by external light, they were able to use the technology in a room with regular lighting. In contrast to X-rays, there is little effect on the person's health. "As this enables us to see the subcutaneous blood vessels in real-time without contact, we believe it can also be used to take blood from or give injections to the elderly and children, whose blood vessels are thin, and to diagnose illnesses in which the shape of the blood vessels is altered," says Mukaigawa.
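The effect of the delay can be illustrated with a toy one-dimensional model. This is only a sketch, not the group's implementation: the line time, the delay value, the intensities and the geometric falloff of scattered light are all assumed numbers chosen to make the idea visible. It shows that a sync delay translates into a row offset between the illuminated line and the exposed line, and that at a nonzero offset the strong surface reflection drops out while a weak scattered component remains.

```python
# Toy 1-D model along the scan direction (rows).
# Every constant below is a hypothetical value for illustration only.

def row_offset(delay_s: float, line_time_s: float) -> int:
    """Rows between the illuminated line and the exposed line for a sync delay."""
    return round(delay_s / line_time_s)


def observed_signal(offset_rows: int, surface: float = 100.0,
                    subsurface: float = 5.0, falloff: float = 0.5) -> float:
    """Light reaching the exposed row when the laser line is `offset_rows` away.

    Assumptions: surface reflection returns only at the illuminated row, and
    subsurface scattering decays geometrically with distance from that row.
    """
    direct = surface if offset_rows == 0 else 0.0
    scattered = subsurface * (falloff ** abs(offset_rows))
    return direct + scattered


LINE_TIME_S = 20e-6  # assumed time for the scan to advance by one row
DELAY_S = 60e-6      # assumed sync delay, well under 1 millisecond

offset = row_offset(DELAY_S, LINE_TIME_S)
in_sync = observed_signal(0)       # surface reflection dominates
delayed = observed_signal(offset)  # only scattered light remains

print(f"offset: {offset} rows, in sync: {in_sync}, delayed: {delayed}")
```

In this model the delayed signal is much weaker than the in-sync one, which is consistent with the article's description that only a small part of the light penetrates the skin and scatters.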

If the light that has gone a little under a surface can be captured, perhaps something could be done by measuring the light slightly above a surface. From this concept, the group created touch-sensing technology that can manipulate an image projected by a projector with a fingertip without a time lag (Figure 4). Previously, the entire image projected by a projector was captured by another camera, which detected the position of the fingertip. However, this method made it difficult to pinpoint the location of the fingertip. Other issues included an inability to differentiate the user's hand from a hand in the projected image, and the time taken for image processing.

Figure 4: Touch-sensing technology that manipulates a projected image using a fingertip

Thus, Mukaigawa and his colleagues built equipment combining a projector and a rolling shutter camera, designed on the assumption that computer processing would be used. The camera does not capture the whole scene. What it captures is limited to the thin space just above the surface that is needed to determine whether a finger is touching, in other words a layer a few centimeters deep running parallel to the surface. When a finger touches the surface, part of the finger is captured; when it does not, nothing is captured. This is the mechanism behind the system.
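Because the camera sees only the thin layer above the surface, the detection step can in principle be very simple. The following is a minimal sketch under that assumption, not the group's actual processing: the function names, the brightness threshold and the minimum pixel count are hypothetical, and the captured layer is represented as a small grid of brightness values between 0 and 1.

```python
from typing import List, Optional

Slab = List[List[float]]  # brightness values captured in the thin layer


def finger_touching(slab: Slab, threshold: float = 0.5,
                    min_pixels: int = 3) -> bool:
    """Return True if enough bright pixels appear in the captured layer.

    `threshold` and `min_pixels` are hypothetical tuning values.
    """
    bright = sum(1 for row in slab for value in row if value > threshold)
    return bright >= min_pixels


def touch_column(slab: Slab, threshold: float = 0.5) -> Optional[float]:
    """Mean column index of the bright pixels, or None if there are none."""
    columns = [col for row in slab
               for col, value in enumerate(row) if value > threshold]
    if not columns:
        return None
    return sum(columns) / len(columns)


# Nothing enters the layer: the capture stays dark.
no_touch = [[0.0] * 6 for _ in range(2)]

# A fingertip enters the layer around columns 2 and 3.
touch = [
    [0.0, 0.0, 0.9, 0.8, 0.0, 0.0],
    [0.0, 0.0, 0.7, 0.9, 0.0, 0.0],
]

print(finger_touching(no_touch), touch_column(no_touch))
print(finger_touching(touch), touch_column(touch))
```

No full-frame image analysis or hand segmentation is needed in this sketch, since anything visible in the layer is by construction close to the surface.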

Using this method, a single camera is enough to determine whether a finger is touching the image. As the system only detects the presence of a fingertip, the processing time is vastly reduced. The group has achieved responsiveness comparable to operating a smartphone. "As this research can control an area for touch sensing, it can also be applied to touchless operations. If we can ensure contactless operation, it could be effective for infection control measures," Mukaigawa commented hopefully.

Archiving stained glass at World Heritage sites using spectral imaging

The project has produced major outcomes as the groups work closely together to advance the design of measuring devices and information processing. Notably, when the project was launched, the group wanted to honor the CREST philosophy, so they scheduled regular meetings between members, held discussions and training sessions that included students, and emphasized sharing approaches with one another.

However, their research was temporarily suspended due to the spread of COVID-19, and the number of meetings dropped off. This may have seemed terrible from an outside perspective, but Mukaigawa recalls that this adversity yielded new developments. "By gaining a little distance from each other, each member came up with their own new ideas and tried them. Since the concepts were shared, this meant we freely developed new directions."

They added four more axes to the initial three of wavelength, viewing position and time in nanoseconds: time in picoseconds, lighting angles on subjects, far-infrared wavelength, and the time difference between illumination and observation scanning. Using these on their own or in combination further widened the range of applications.

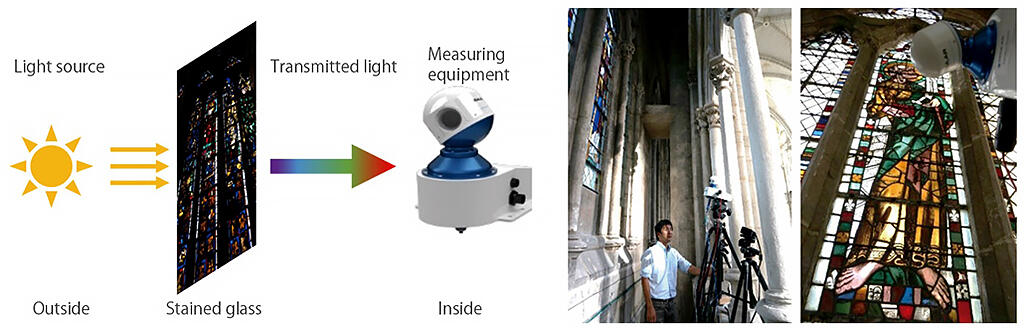

One outcome was the digital archiving of the World Heritage site Amiens Cathedral, which took place as part of a French project for the highly accurate digital archiving of its architectural heritage. This was accomplished via joint research with the French Université de Picardie Jules Verne (UPJV). UPJV was responsible for the architectural forms and Mukaigawa and his colleagues were responsible for the stained glass.

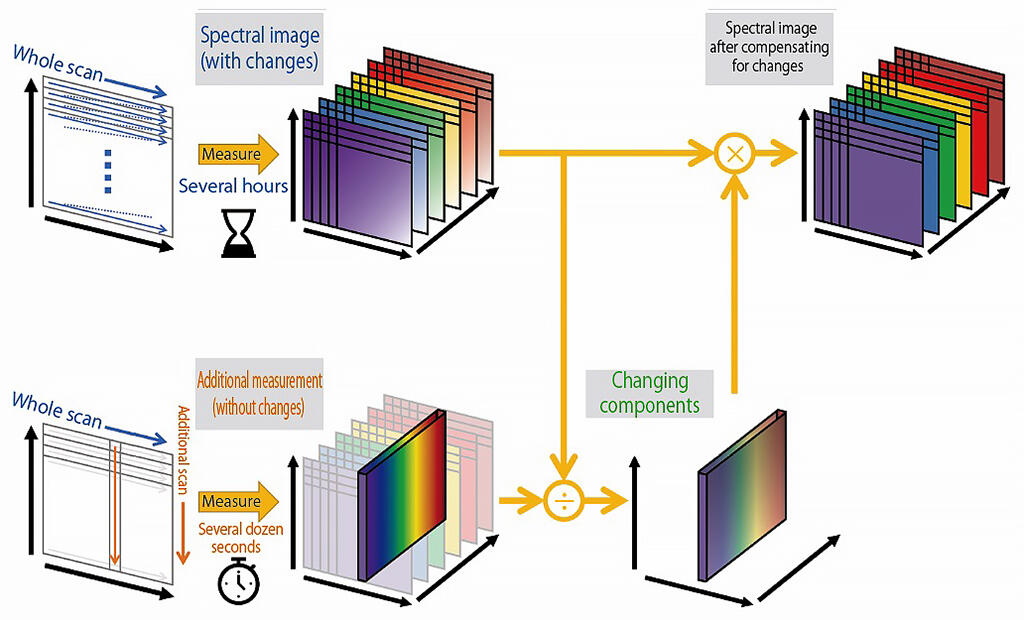

For this imaging, the group used a measuring system made up of a high-resolution spectrometer and a spinning mirror system. It is capable of high-precision spectral imaging with a resolution of less than 1 nanometer over a range of 400-2,500 nanometers (1 nanometer is one billionth of a meter). However, because this method builds the image one scanning line at a time, in order from the top, it takes several hours to measure the image as a whole. Consequently, it is difficult to accurately measure the colors of stained glass lit by external light, since the weather and the height of the sun change from moment to moment.

Thus, while scanning the glass from the top, Mukaigawa and his colleagues ran an additional vertical scan with just a single scanning line. Mukaigawa explained why this worked: "Doing this meant we were able to obtain a double image: the spectral image affected by any changes and additional measurements that were not affected by these. Comparing the two gave us the components that changed due to the natural light, and we were able to obtain images calibrated to cope with the effects of the changes by removing these components from the changing spectral images." They also developed a method to analyze the higher-dimension data obtained with this technique in a short period of time. As a result, the group achieved the digital archiving of the stained glass with its colors unaffected by changes in external light (Figures 5 and 6).
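One way to picture the comparison Mukaigawa describes is the sketch below. It is an illustration under strong assumptions, not the group's published method: the change in natural light is modeled as a single multiplicative gain per scanned row, the additional vertical line is treated as free of that change, and only one wavelength band is shown (the same step would be repeated per band). The function name `compensate` and all values are hypothetical.

```python
from typing import List

Image = List[List[float]]


def compensate(slow_scan: Image, ref_line: List[float], ref_col: int) -> Image:
    """Remove a per-row illumination gain using a reference vertical line.

    slow_scan: rows measured one after another while the daylight changes.
    ref_line:  values along column `ref_col`, assumed unaffected by the change.
    Assumes reference values and gains are nonzero.
    """
    corrected = []
    for row, ref_value in zip(slow_scan, ref_line):
        gain = row[ref_col] / ref_value  # how much the light changed for this row
        corrected.append([value / gain for value in row])
    return corrected


# Hypothetical "true" transmittance of the glass in one wavelength band.
true_image = [
    [0.2, 0.4, 0.8],
    [0.1, 0.5, 0.3],
    [0.6, 0.2, 0.4],
]

# Assumed daylight change during the slow scan: one gain per row.
row_gain = [1.0, 0.5, 2.0]
slow_scan = [[v * g for v in row] for row, g in zip(true_image, row_gain)]

# The additional vertical scan samples column 1 without the daylight change.
REF_COL = 1
ref_line = [row[REF_COL] for row in true_image]

corrected = compensate(slow_scan, ref_line, REF_COL)
print(corrected)
```

In this simplified model, each row of the slow scan shares one pixel with the vertical line, and the ratio at that pixel is enough to undo the change for the whole row.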

Figure 5: The spectral imaging of the stained glass at Amiens Cathedral

Figure 6: Obtaining spectral images that compensate for changing natural light

The group believes that research on plenoptic measurement has applications in all sorts of fields, from food to automobiles, medicine and cultural assets. Mukaigawa, who is from Nara and has therefore always felt close to Japan's cultural assets, particularly hopes to move forward with applications in that field. In the lab, the group has already succeeded in making the characters of old texts easier to read by removing the "see-through" effect. "I'd like to use our achievements in World Heritage digital archiving as a foothold to someday work on Nara's cultural assets, including the Kitora Kofun murals," mused Mukaigawa.

(TEXT: Yuko Sakurai, PHOTO: Hideki Ishihara)