Artificial intelligence (AI) was a hot topic of discussion around the world in 2023. A major driver was the rapid spread of generative AI such as "ChatGPT" in many countries, which set off a boom. New rules have also been created in the Group of Seven (G7) industrial nations and other countries. At an online meeting on December 6, 2023, the G7 leaders finally agreed on the International Guiding Principles for Organizations Developing Advanced AI Systems, based on the "Hiroshima AI Process," for the use and regulation of AI. The guidelines clearly describe the responsibilities to be upheld by "all stakeholders," from developers to users, and present a comprehensive set of international rules.

Generative AI is being used in various contexts by millions of people worldwide. However, this has given rise to a serious problem: false information, fake videos, and fake news created with generative AI are continually spreading on social networking sites (SNS), misleading people and causing confusion in society. The government of Japan and the governments of other countries are taking a serious view of this situation and are calling for effective countermeasures. In 2024, discussions will continue in advanced countries such as Japan and the European nations toward coexistence with "safe, secure, and trustworthy AI."

(Provided by the Cabinet Public Relations Office)

The rapid adoption of ChatGPT has led to a mix of expectations and concerns

ChatGPT is an interactive chatbot and a leading example of generative AI, released to the public in November 2022 by the U.S. startup OpenAI. Trained on vast amounts of data from the Internet, ChatGPT generates text, images, or audio in response to user requests. In 2023, OpenAI released a succession of improved versions of ChatGPT. At the same time, other AI companies introduced their own generative AI systems, ushering in an "era of fierce competition in generative AI."

With the rapid increase in the number of users of generative AI, related services have also expanded and quickly spread around the world. Expectations for the technology have grown because it can be applied in many fields, including business, government, and education. However, problems arose when articles, papers, photographs and other images, and personal data were used without permission to train generative AI. In addition to copyright infringement, the spread of false information and fake videos has become a common social issue worldwide. In Japan, the increasing use of ChatGPT by companies and local governments has sparked heated debate over its pros and cons, and a number of universities have warned students against using it casually.

In July 2023, the Ministry of Education, Culture, Sports, Science and Technology released a set of guidelines outlining the use of, and precautions regarding, generative AI in elementary, junior high, and high schools. While the guidelines give examples of effective uses of AI, such as in group discussions and in learning programming, they regard its use in tests and in writing compositions as inappropriate. Experts in education hold differing views on its use in schools, and many have voiced concerns that generative AI could hinder the development of critical thinking skills and creativity.

As ChatGPT became widely popular, expectations and concerns about generative AI began to intersect. European countries, in particular, were quick to initiate regulatory measures. The G7 also took a serious view of the situation. At the G7 summit hosted by Japan in Hiroshima in May 2023 (the Hiroshima Summit), the leaders decided to launch the Hiroshima AI Process. Japan then led the work, organizing the related international meetings.

(Provided by OpenAI)

Clearly stating that 'human rights and human-centricity should be respected'

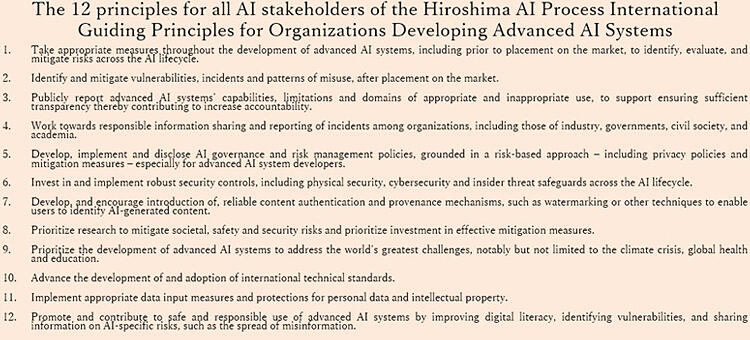

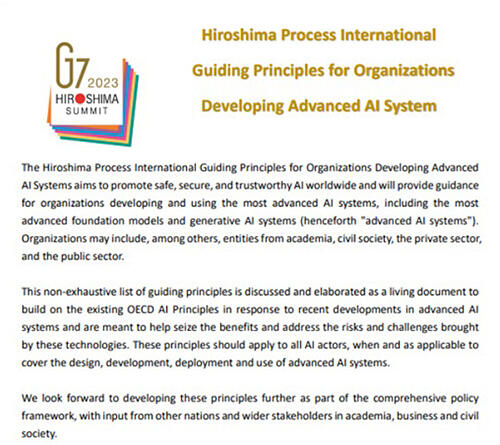

On December 1, 2023, the G7 held an online Digital and Tech Ministers' Meeting, at which the ministers compiled international guiding principles based on the Hiroshima AI Process and adopted a ministerial statement. Officials from Japan's Ministry of Internal Affairs and Communications, Ministry of Economy, Trade and Industry, and Digital Agency attended, as did representatives of international organizations such as the Organisation for Economic Co-operation and Development (OECD). The G7 leaders then finally agreed on these guiding principles at their online meeting on December 6, 2023. This was the first time a comprehensive set of international guiding principles on AI had been established. Although the principles are not legally binding, they are expected to influence how each country formulates its own rules for the use and regulation of AI.

The most significant feature of these international guiding principles is that they target not only developers and creators but also "all AI stakeholders," including users. The guidelines consist of a main body setting out rules for all stakeholders and a code of conduct specifying concrete measures that developers should follow. The main body and the code of conduct each consist of 12 principles.

The main body of the guidelines clearly states that it "aims to promote safe, secure, and trustworthy AI worldwide and will provide guidance for organizations developing and using the most advanced AI systems, including generative AI systems." It also states that AI "should respect human rights, diversity, fairness and non-discrimination, democracy, and human-centricity." Additionally, it calls for (a) the implementation of appropriate measures to assess and mitigate risks associated with AI, (b) the introduction of measures to protect personal data and intellectual property and prevent misuse, and (c) the development of technologies that enable users to identify AI-generated content.

To improve the security and reliability of AI systems, the code of conduct calls for sharing information among relevant organizations and reporting incidents to the public. It also calls for promoting the development of technologies such as "digital watermarking" to identify content created by generative AI, and for prioritizing research to prevent bias and misinformation.

(Provided by the Ministry of Foreign Affairs/Prime Minister's Office of Japan)

(Provided by the Ministry of Foreign Affairs)

Europe, the United States, and Japan have established their own rules

Following the G7 leaders' agreement on the international guiding principles, individual countries are now in the final stages of formulating their own rules. The European Union (EU), which places strong emphasis on the ethical issues surrounding AI, has taken the lead on regulation. According to a Kyodo News report from Brussels, on December 9, 2023, the EU reached a broad agreement on a comprehensive AI regulation bill that also covers generative AI. While the G7 international guiding principles are not legally binding, the EU bill includes penalties and would be legally enforceable.

If the bill is formally adopted, the regulation is expected to take effect as early as 2026 and will be the world's first comprehensive AI regulation. In the most serious cases, violations will be punishable by fines of up to €35 million (approximately 5.5 billion yen) or 7% of annual turnover. Like the United States, China, and other countries, the EU is committed to developing AI, but it is also deeply concerned about AI's negative impact on human rights and democracy. It has therefore drawn up specific legal and regulatory measures with remarkable speed.

The movement to regulate AI is a global trend. In October 2023, U.S. President Joe Biden signed an executive order requiring developers of advanced AI systems that could pose risks to national security to share information, such as safety test results, with the government. The British government hosted an "AI Safety Summit" in early November 2023, where participants adopted the "Bletchley Declaration on AI Safety." Prime Minister Fumio Kishida joined the summit online, and 28 countries, including Japan, the United States, and China, together with the EU, agreed to adopt the declaration. They confirmed a policy of strengthening international collaboration to anticipate the risks of AI and prevent its misuse.

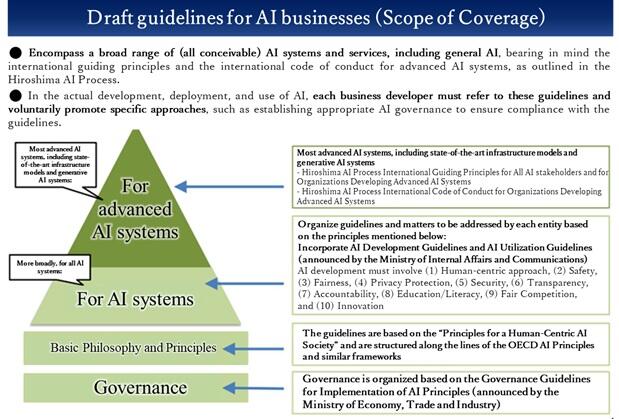

In Japan, discussions on the use and regulation of AI have been conducted primarily through the government's AI Strategy Council, with the involvement of ministries such as the Ministry of Internal Affairs and Communications and the Ministry of Economy, Trade and Industry. At a council meeting on December 21, 2023, the government presented draft guidelines for AI-related businesses. Centered on 10 principles and calling for consideration of human rights and measures against misinformation and fake videos, the draft guidelines are aligned with the G7 international guiding principles. The government aims to solicit public comments in early 2024 and to officially announce the guidelines by March 2024. Prime Minister Kishida, who attended the meeting, announced plans to establish an "AI Safety Institute" by January 2024 to advance research on methods for evaluating AI safety.

(Provided by the Prime Minister's Office/Cabinet Public Affairs Office)

(Provided by the Ministry of Internal Affairs and Communications, Ministry of Economy, Trade and Industry)

Pressing need for measures to prevent issues of misuse and damage

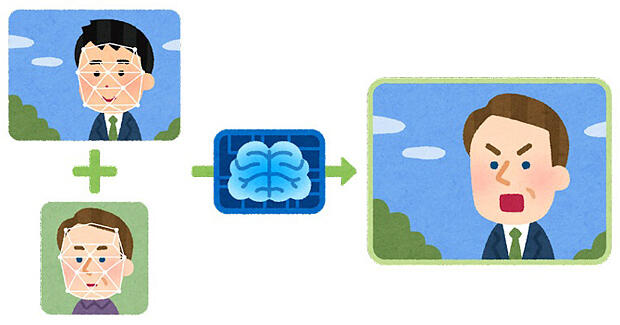

Among the various types of AI misuse, the most pressing concern at present is the creation of fake videos known as "deepfakes." The dangers of deepfakes have been recognized for several years, but the number of fake videos circulating worldwide has risen sharply since 2022 with the proliferation of generative AI. The problem has become a growing concern for the international community.

For example, after Russia's invasion of Ukraine, a fake video showing Ukrainian President Volodymyr Zelensky calling on his soldiers to lay down their weapons circulated widely online. More recently, a video believed to be fake circulated amid the ongoing war between the Islamist organization Hamas and the Israeli military in the Palestinian territory of Gaza. Fake videos related to the 2024 U.S. presidential election have already begun to appear online.

In Japan, in early November 2023, a fake video that used Prime Minister Kishida's voice and likeness to show him making sexual remarks was widely circulated on SNS. The government has adopted a policy of promoting measures to prevent the misuse of AI in cooperation with the relevant ministries and agencies, and it continues to discuss the issue in the AI Strategy Council and other forums.

Identifying fake videos created with generative AI is not easy. However, the only way to counter advanced technology is with even more advanced technology, so effective countermeasures ultimately depend on technological progress.

In April 2023, a group from the Graduate School of Information Science and Technology at the University of Tokyo announced that it had developed a technique that detects deepfakes with world-leading accuracy, attracting attention in Japan and abroad. The group claims that the method can detect even the faintest traces of forgery. Many major AI companies around the world, including Microsoft, are also racing to develop detection software.

The international guiding principles agreed on by the G7 also include promoting measures against the spread of misinformation, including fake videos; however, effective and concrete preventive measures remain a challenge for the future. The misuse of generative AI is a global issue that no single country can solve alone. Countries need to share research and development findings and related information as fully as possible, and international collaboration is crucial. The world must pool its collective wisdom to find concrete measures that prevent AI misuse and the damage it causes.

The advent of generative AI has forced people to reconsider the various issues surrounding AI. We cannot ask generative AI how to use AI wisely and achieve harmonious coexistence and mutual prosperity with it; humans must reflect on this question themselves. The year 2023, which saw the boom in generative AI, is drawing to a close. In 2024, people will need to think calmly about how humans and AI should coexist, guided by the international guiding principles, and to harness human wisdom to use AI for the benefit of humankind.

(UCHIJO Yoshitaka / Science Journalist, Kyodo News Visiting Editorial Writer)

Original article was provided by the Science Portal and has been translated by Science Japan.