The 2024 Nobel Prize in Physics was awarded to John Hopfield (Princeton University, USA) and Geoffrey Hinton (University of Toronto, Canada). The award recognized foundational discoveries and inventions that enable machine learning with artificial neural networks, which form the basis of modern AI.

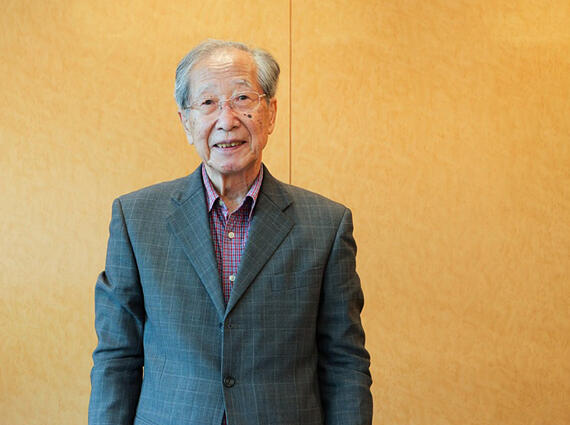

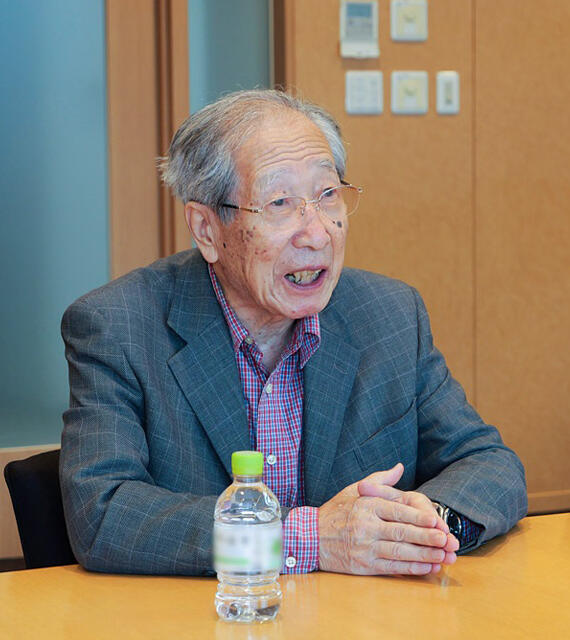

However, similar research had been conducted in Japan, and some of it was well ahead of its time. Shunichi Amari, Honorary Researcher at RIKEN and former Director of the RIKEN Brain Science Institute, was a key figure in that research. Commenting on the announcement of the Nobel Prize in Physics, he congratulated the two laureates and added, "Artificial intelligence and neural network theory research has origins in Japan..."

In the first part of this article, Science Portal asked Dr. Amari to reflect on these "origins" and describe how research that led to today's AI technology was carried out in Japan.

Theory created by an independent circle of young researchers in the early 1960s

— Looking back a little on the history of AI research, can you tell us when you first became involved in it, Dr. Amari?

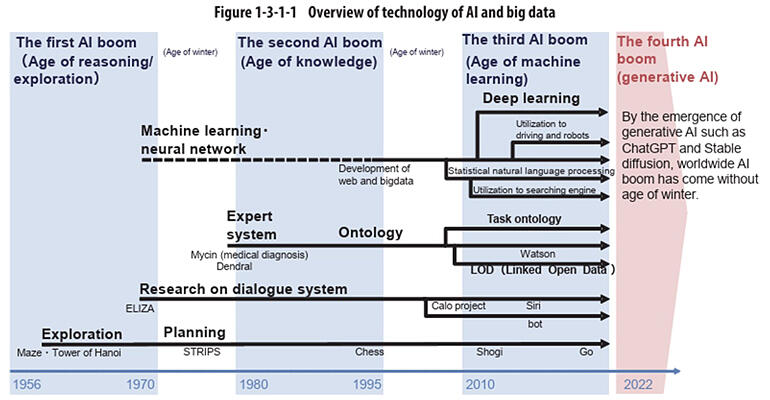

AI research has had many ups and downs on the way to where it is today.

The earliest AI research aimed to achieve intelligence by programming computers. Around the same time, however, another idea emerged: since the human brain acquires its intellectual functions through learning, it might be possible to achieve human-like intellectual functions by building an artificial neural network in a computer and having it learn.

This idea took shape as the "perceptron," a brain-like learning machine proposed by the American psychologist Frank Rosenblatt. That was in the 1950s and 1960s. Ultimately, today's AI is an advanced version of this perceptron.

From the early stages of this research, there was also the idea that it should be linked to research on the brain. In other words, if we study how the brain works from the perspective of information processing, we may learn something new about artificial intelligence.

I completed my doctoral program at the University of Tokyo in 1963 and then went on to work at Kyushu University. My field was mathematical engineering, which is, in essence, the idea that by examining the mechanisms of all things in the world with mathematical concepts, we can explain them theoretically. So the subject can be anything, and I worked on a variety of subjects.

When I took up my post at Kyushu University, Rosenblatt's perceptron was just starting to attract attention. I, too, was fascinated by the idea that if we programmed something similar to a neural network inside a computer and had it learn, it could then recognize patterns. So I formed an interdisciplinary research circle with young researchers in mathematical fields, saying, "Let's study the perceptron." This was my first research directly related to AI.

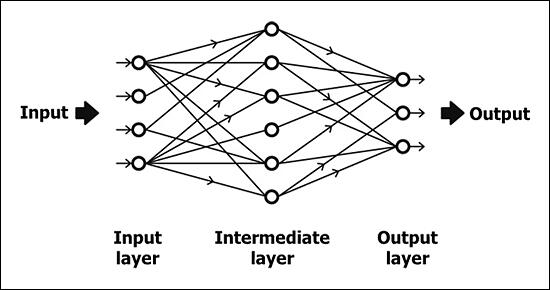

Created by the Science Portal Editorial Office based on Dr. Amari's original drawing

We came up with a system that allowed learning in the intermediate layer

— I understand that the "stochastic gradient descent method," which underlies the achievements recognized by the Nobel Prize in Physics, was developed in that independent research circle. Could you briefly explain it?

Yes, that's correct. Rosenblatt's learning machine consists of three layers: an "input layer" that receives input signals, an "intermediate layer" that transforms information, and finally an "output layer" that makes decisions. Learning takes place only in the last layer; the intermediate layer merely processes information.

To address this limitation, we came up with a model in which the intermediate layer also learns. But how can a machine be made to learn in the intermediate layer as well? At that time, artificial neurons (modeled after neurons in the brain) were of the zero-one type, either on or off. When the answer was wrong, only the elements in the output layer were adjusted, and that is how the network learned.
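The arrangement described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Rosenblatt's actual machine: his intermediate units were randomly connected, whereas here the two intermediate units are hand-wired (one computes OR, the other AND) so their effect is easy to follow, and the names `step`, `hidden_layer`, `predict`, and `train_output_layer` are hypothetical. Neurons are zero-one units, and only the output weights are corrected, and only when the answer is wrong.

```python
def step(v):
    """Zero-one neuron: outputs 1 if its summed input is positive, else 0."""
    return 1 if v > 0 else 0

# Hypothetical, hand-wired intermediate layer (an assumption for illustration).
# It is never trained: unit 1 fires for "x1 OR x2", unit 2 for "x1 AND x2".
HIDDEN_WEIGHTS = [([1.0, 1.0], -0.5), ([1.0, 1.0], -1.5)]

def hidden_layer(x):
    return [step(sum(wi * xi for wi, xi in zip(w, x)) + b) for w, b in HIDDEN_WEIGHTS]

def predict(h, w, b):
    return step(sum(wi * hi for wi, hi in zip(w, h)) + b)

def train_output_layer(samples, epochs=20):
    """Perceptron error-correction rule: only the output weights ever change."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            h = hidden_layer(x)
            error = target - predict(h, w, b)  # 0 if correct, +1 or -1 if wrong
            w = [wi + error * hi for wi, hi in zip(w, h)]
            b += error
    return w, b

# XOR is learnable here only because the fixed hidden units already compute OR and AND.
xor = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
w, b = train_output_layer(xor)
print([predict(hidden_layer(x), w, b) for x, _ in xor])  # → [0, 1, 1, 0]
```

Because the intermediate layer never changes, a task like XOR is solvable only if its fixed units happen to compute useful features; with zero-one neurons there is no gradual signal the rule could use to improve them.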

So we came up with the idea of an "analog neuron": instead of being either "on" (1) or "off" (0), a neuron would receive and output continuously varying analog quantities such as "3.61" or "0.12." We thought this would allow the intermediate layer to learn and make machine learning work better. In fact, the inputs and outputs of neurons in the real brain are also analog.

We incorporated this mechanism into a neural network model. If the answer is wrong during learning, the analog values at the connection points (the weights applied to incoming signals) are changed slightly, whether in the final output layer or in the intermediate layer. This changes the final answer a little. When you change many parameters little by little, differentiation tells you how the answer changes. By adjusting the parameters in the direction that brings the answer closer to the correct one, learning progresses quickly. In mathematics, this direction is given by the "gradient." This idea was summarized in a paper published in 1967. Unfortunately, public enthusiasm for AI waned as the technology fell short of expectations, and by the 1970s research in the field had declined worldwide.
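The learning scheme described here, analog neurons whose connections in both the output and the intermediate layer are nudged along the gradient one example at a time, can be sketched in Python. This is a minimal illustration, not a reconstruction of the 1967 formulation: the sigmoid as the analog response, the squared-error measure, the learning rate, the XOR task, and all function names (`forward`, `mse`, `init_params`, `sgd_train`) are assumptions chosen for brevity. The chain-rule step that carries the error back to the intermediate layer is what later became known as error backpropagation.

```python
import math
import random

def sigmoid(v):
    """Analog neuron (assumed sigmoid): a response varying continuously between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-v))

def forward(x, W1, b1, w2, b2):
    """Intermediate layer of analog neurons, followed by one analog output neuron."""
    h = [sigmoid(sum(w * xj for w, xj in zip(row, x)) + bk) for row, bk in zip(W1, b1)]
    y = sigmoid(sum(w * hk for w, hk in zip(w2, h)) + b2)
    return h, y

def mse(data, params):
    """Mean squared error of the output over the whole data set."""
    return sum((forward(x, *params)[1] - t) ** 2 for x, t in data) / len(data)

def init_params(n_inputs=2, n_hidden=4, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, b1, w2, rng.uniform(-1, 1)

def sgd_train(data, params, lr=0.5, epochs=5000, seed=1):
    """Stochastic gradient descent on (y - t)^2 / 2, one example at a time."""
    W1 = [list(row) for row in params[0]]
    b1, w2, b2 = list(params[1]), list(params[2]), params[3]
    rng = random.Random(seed)
    order = list(data)
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a random order each epoch
        for x, t in order:
            h, y = forward(x, W1, b1, w2, b2)
            # How the error changes with the output neuron's summed input.
            d_out = (y - t) * y * (1 - y)
            # Chain rule: how it changes with each intermediate neuron's input
            # (computed before w2 is modified).
            d_hid = [d_out * w2[k] * h[k] * (1 - h[k]) for k in range(len(h))]
            # Move every connection a little against its gradient, in both layers.
            for k in range(len(h)):
                w2[k] -= lr * d_out * h[k]
                b1[k] -= lr * d_hid[k]
                for j in range(len(x)):
                    W1[k][j] -= lr * d_hid[k] * x[j]
            b2 -= lr * d_out
    return W1, b1, w2, b2

xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
start = init_params()
trained = sgd_train(xor, start)
print("error before:", round(mse(xor, start), 4), "after:", round(mse(xor, trained), 4))
print("outputs:", [round(forward(x, *trained)[1], 2) for x, _ in xor])
```

Unlike the zero-one rule, every weight here, including those feeding the intermediate layer, receives a small correction whenever the output differs from the target. Whether a particular run solves XOR exactly depends on the random starting weights.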

This research has its origins "in Japan as well"

— Then came the second boom in the late 1970s and 1980s.

In 1986, a paper on "backpropagation" (error backpropagation) was published, and its learning idea was exactly the same as the one we had presented in our 1967 paper. By then, computer performance had improved considerably, so simulations could be run on a large scale, and this gave momentum to the second boom. Our theory, however, had been completely forgotten.

Meanwhile, a movement was growing in Japan at that time to link brain science with mathematical information science and study the brain from a mathematical perspective. A project led by Professor Masao Ito of the University of Tokyo then set out to organize a large-scale brain research group. Its organizers wanted theoretical researchers included, so I joined as well.

The trend toward studying the workings of the brain mathematically continued unabated in Japan throughout the 1960s and 1970s. I returned to the University of Tokyo and began working in earnest on the brain's neural circuits. Kunihiko Fukushima, whose name also came up frequently in discussions of this year's Nobel Prize, worked at the NHK Science and Technology Research Laboratories (NHK Giken), where he studied how the brain works.

Several researchers, including Mr. Fukushima and myself, took the lead in building models of the brain. At the time, Japan was proposing various models of neural circuits and was probably the most advanced country in this field. In relation to AI, we came up with the "associative memory" model in the 1970s. How does the brain remember and recall things? Hypothesizing that the brain calls related information to mind by association, I created a model of associative memory and formulated it mathematically.
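A common textbook form of this kind of associative memory, sometimes called the Amari–Hopfield model, stores patterns of ±1 neuron states in a connection matrix built from their correlations (a Hebbian outer-product rule) and recalls a stored pattern by repeatedly thresholding from a partial or noisy cue. The Python sketch below assumes that textbook form, synchronous updates, and three hand-chosen, mutually orthogonal 16-neuron patterns; it is not a reproduction of Dr. Amari's original equations, and the names `store` and `recall` are hypothetical.

```python
def sign(v):
    """Binary neuron state: +1 (firing) or -1 (silent); ties go to +1."""
    return 1 if v >= 0 else -1

def store(patterns):
    """Correlation (Hebbian outer-product) rule: W[i][j] = sum over patterns of p[i] * p[j]."""
    n = len(patterns[0])
    W = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    W[i][j] += p[i] * p[j]
    return W

def recall(W, cue, max_steps=10):
    """Update all neurons together until the state stops changing (a fixed point)."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = [sign(sum(w * s for w, s in zip(row, state))) for row in W]
        if new_state == state:
            break
        state = new_state
    return state

# Three mutually orthogonal 16-neuron patterns (hand-chosen for this illustration).
p1 = [1] * 8 + [-1] * 8
p2 = ([1] * 4 + [-1] * 4) * 2
p3 = [1, 1, -1, -1] * 4
W = store([p1, p2, p3])

# Cue: p1 with two neurons flipped, i.e. a partial, noisy reminder.
cue = list(p1)
cue[0], cue[9] = -cue[0], -cue[9]
print(recall(W, cue) == p1)  # → True
```

Because the stored patterns are orthogonal and the cue differs from `p1` in only two neurons, the correlation with `p1` dominates every neuron's input, so the first update already restores `p1`, which is then a fixed point.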

Interestingly, associative memory and stochastic gradient descent are what led to the achievements recognized by this year's Nobel Prize in Physics. Although there was no direct connection, the laureates rediscovered our ideas and advanced them, developing them into today's AI.

In fact, Hopfield's paper does cite my work, but not the directly relevant paper. My paper on associative memory was not cited; a later paper I wrote on self-organization was cited instead. I don't know what kind of mistake led to that citation, but that's just the way it happened.

— In your comment about the Nobel Prize in Physics, you wrote that "Its origins are in Japan as well." What did you mean by that?

One of the roots of the research recognized by this year's Nobel Prize in Physics is the stochastic gradient descent method. It may seem obvious in hindsight, but I was the first to say that a perceptron-type neural network could be trained using stochastic gradient descent.

As I mentioned earlier, a model identical to Hopfield's associative memory model had already been published in Japan. The mechanism of deep learning, which processes data through many stacked intermediate layers, is also basically the same as the "neocognitron" devised by Mr. Fukushima. That research was already being conducted in Japan around 1978.

That's why I wrote that "Its origins are in Japan as well." When I was drafting that message, I was a little unsure whether to write "Its origins are in Japan" without the "as well." But I thought that would be disrespectful, because before the Japanese research there were giants such as Rosenblatt, and our research was influenced by them. That's why I thought the expression "in Japan as well" was appropriate.

(provided by RIKEN)

Profile

Shunichi Amari

Honorary Researcher at RIKEN and former Director of the Brain Science Institute.

Professor Emeritus at the University of Tokyo.

Specially Appointed Professor at the Teikyo University Advanced Comprehensive Research Organization.

Born on January 3, 1936. He is interested in mathematical engineering broadly and has studied circuit network theory using topology, continuum mechanics (physical space theory) using differential geometry, information theory, learning and pattern recognition, and neural network theory. In recent years, he has advocated "information geometry," a common theoretical foundation for statistics, systems theory, and information theory, and is building a system of information mathematics on that basis. He is also internationally known as a pioneer of AI research. He has received numerous honors, including the Japan Academy Prize, the Order of the Sacred Treasure, Person of Cultural Merit, and the Order of Culture.

(UTSUGI Satoshi / Science writer)

The original article was provided by Science Portal and translated by Science Japan.